6 Facts About Oprah Winfrey, the “Queen of All Media”

Original photo by Maximum Film / Alamy Stock Photo

Best known for her quarter-century run as host of her eponymous talk show, Oprah Winfrey has forged an extraordinary career that made her, among other things, the first woman to own and produce her own talk show, a 2013 recipient of the Presidential Medal of Freedom, and one of the few celebrities famous enough to be known by her first name alone. Here are six more facts about the wildly successful screen personality, entrepreneur, and philanthropist appropriately known as the “Queen of All Media.”

Her Real First Name Is “Orpah”

According to her birth certificate, we’ve been saying the media queen’s first name incorrectly all this time. Born in January 1954 in Kosciusko, Mississippi, the future TV host was named “Orpah,” after a woman in the Bible’s Book of Ruth. However, the unusual name immediately caused confusion among her family, who adopted a slightly different version of the moniker for their newest member. As she later explained in an early audition tape, “No one knew how to spell in my home, and that’s why it ended up being Oprah.” Her birth certificate still reads “Orpah,” but to everyone who knows her, including millions of fans around the world, she’ll always be “Oprah.”

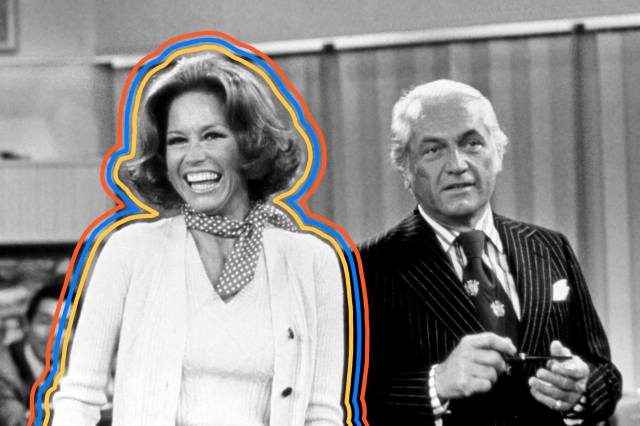

Oprah Initially Wanted No Part of Daytime Talk Television

A few years after beginning her TV career as a news anchor at age 19, Oprah was dismayed to learn that executives at Baltimore’s WJZ-TV wanted her to co-host a daytime talk show. (She reportedly worried that she “wouldn’t be taken seriously as a journalist.”) According to Kitty Kelley’s Oprah: A Biography, the newscaster begged her bosses to reconsider and left their meeting “with tears in her eyes” when she realized she had no other option. But something clicked while she conducted interviews during the August 1978 debut of People Are Talking, and Oprah realized that “this is what [she] was meant to do.”

A Date With Roger Ebert Sent Her Career in a New Direction

Fans are well aware of Oprah’s long-term relationship with Stedman Graham, but lesser known is her brief but consequential romance with famed movie critic Roger Ebert. During a dinner date in the mid-1980s, Oprah confided that she was unsure how to handle offers to take her show into national syndication. Ebert, by then already a TV veteran as co-host of At the Movies, did some quick calculations that showed the staggering amount of money she stood to earn from a syndication deal. The financial tip wasn’t enough to save their fledgling romance, but it did point Oprah in the right direction and pave the way for the syndicated launch of The Oprah Winfrey Show in September 1986.

She Made Her Film Debut With an Assist From Quincy Jones

Her early television success notwithstanding, Oprah remained hopeful of realizing her dream of becoming an actress. She finally got the opportunity she’d been waiting for in the mid-1980s, when music producer Quincy Jones, who was trying to pull together a big-screen adaptation of Alice Walker’s Pulitzer Prize-winning novel The Color Purple, was captivated by the still relatively unknown TV host and recommended her to the film’s casting director. Despite her lack of professional acting experience, Oprah won the part of Sofia, a choice that was validated when she earned a Best Supporting Actress Academy Award nomination for her performance in the 1985 film. She went on to found her own production company, Harpo Productions, through which she continued to satisfy her acting ambitions, with roles in movies including Beloved (1998) and Selma (2014). She also earned acclaim for her performance in The Butler (2013), and starred alongside Reese Witherspoon and Mindy Kaling in 2018’s flashy film adaptation of Madeleine L’Engle’s A Wrinkle in Time.

Oprah Acted on an Employee’s Tip To Launch Her Book Club

One of the most popular recurring segments on her show, Oprah’s Book Club grew out of a love of reading she shared with an intern named Alice McGee. After several years of bonding over the books they were enjoying, McGee, who had risen to become a senior producer, suggested to Oprah that they open up the literary discussion to audience members. Although her team was hesitant to support the idea at first, they determined that it could work if audiences were given enough time to read a book. So on September 17, 1996, Oprah’s Book Club hit the airwaves with her recommendation of Jacquelyn Mitchard’s The Deep End of the Ocean.

In the 15 years that followed, Oprah recommended some 70 books, from Toni Morrison’s Paradise and Edwidge Danticat’s Breath, Eyes, Memory to John Steinbeck’s East of Eden and Leo Tolstoy’s Anna Karenina. The segment encountered a few bumps along the way — most famously when the September 2005 selection A Million Little Pieces, by James Frey, was revealed to have been partially fabricated, despite being marketed as nonfiction — but its success and influence were undeniable. Authors whose books were chosen often saw massive increases in sales, a boost that became known as the “Oprah Effect.” The original Book Club ended with her show in 2011, but recent years have seen newer iterations in O, The Oprah Magazine and on Apple TV+.

Oprah Despises Chewing Gum

Oprah has famously shared a list of her favorite things almost every year since the 1990s, first on her eponymous talk show, then in O, The Oprah Magazine, and, more recently, on her Oprah Daily website. One thing you’ll probably never see on the list? Chewing gum. As she shared during a 2018 appearance on The Late Show With Stephen Colbert, she “intensely” dislikes the stuff. Her aversion stems from her grandmother, who left gum all around the house. “She would put it on the bedpost,” Oprah recalled. “She would put it in the cabinet. She would put it everywhere around. And so as a child, I would bump into it, and it would, like, rub up against me.” The media queen has since attempted to eradicate any traces of gum from her life, with limited success: “It’s barred at my offices, nobody is allowed, but when I go out into the world, I can’t bar it,” she told Colbert. “It’s a thing. It creeps me out.”

Tim Ott has written for sites including Biography.com, History.com, and MLB.com, and is known to delude himself into thinking he can craft a marketable screenplay.

Interesting Facts is part of Optimism, which publishes content that uplifts, informs, and inspires.